TLDR: my overall rating of the course is about 7/10. It was fun doing it, and I'm happy I completed it all, but I'm also glad I didn't pay for it out of my own pocket.

- The good:
  - (1) gives a broad understanding of the many components that make up an AV architecture
  - (2) a lot of material that requires quite some effort to finish (though clearly not exhaustive or comprehensive of the AV domain / state of the art)
  - (3) for the most part, well-maintained and professionally produced learning material

- The bad:
  - (1) at times very uneven quality of the course material
  - (2) the half-year completion time is very, very understated for somebody doing this after hours
  - (3) doesn't push too deep into any particular topic (this could be good ;p)
  - (4) the practical skillset is only as good as it can get from an academic course (i.e. doomed to become outdated; in practice never enough for a very competitive industry)
  - (5) the marketing spin on how great this course is for job-finding is a bit annoying (and imho obviously untrue)
  - (6) the course structure could be better

Udacity nano-degree?

About half a year ago I started a Udacity course on Autonomous Vehicles. It's labelled a "nano-degree" and (in theory) takes about half a year to finish. Essentially, it's a glorified online course with videos, text and coding exercises meant to introduce the student to the AV area, kind of like a full-semester university course, just with less theory and more practice. Still, it's packed with material and has a long time-frame, so "nano-degree" is sort of a justified marketing stunt 😉

Udacity is one of the big online learning platforms, like Coursera or Skillshare, but its co-founder, Sebastian Thrun, is known for his contributions to AV research and has a background in robotics, so I always looked at this course with interest. It's actually been online for quite a while (since 2012), but I didn't want to pay for it myself before. When the opportunity came along and I got it sponsored, I decided to go ahead!

Why do the nano-degree?

For me, the goal was to do something interesting after hours. The whole self-driving hype really captures my imagination, and I believe that sooner or later it will materialize. I wanted to see how the technologies I deal with professionally every day (machine learning, deep learning) are applied in practice to robotics and AV. I also wanted to check, hands-on, whether any topics from this area would feel right for me and be interesting to get into long-term.

Course overview

The course consists of two big blocks: (1) the first is more vision/sensor oriented; (2) the second leans towards robotics and steering the car. Within each block, the general structure is a series of lessons made of text/video tutorials mixed with small coding exercises. After every few lessons there is a bigger project that involves more coding to solve some practical AV problem. The coding frequently does not start from scratch: there are source code templates, and the student needs to fill in some missing functions. Overall, for an online course I would say that's as good as it gets.

My general gripe here is that the entire course should be preceded by a module that gives an overview of the entire domain (i.e. the car's subsystems) and connects the projects to the individual blocks. This is done informally at the end, but very briefly. I also think each learning module should start with a short lesson giving a brief state-of-the-art overview; unfortunately that is missing (aside from links to research papers, but without any useful/curated explanations). Ideally, as a student I would like to know what the current cutting edge is, what's used in industry/academia, and how my implementation relates to the state of the art. Obviously a 6-month course cannot cover the most advanced techniques, but an executive summary of the solution landscape would make things a lot clearer.

Project assignments review

Below is my own subjective review of all the projects in the course, which gives a high-level idea of what the learning material covers. I also provide GitHub links to my solutions for every project.

| No | Project | Rating | Technology | Comments |

| 1 | Lane Detection | 7/10 | – Canny edge detection – Hough transform | Objective: Given images/video of road detect lanes using Computer Vision approach -A lot of fun given this was the first project. Lots of tweaking and adjusting. |

| 2 | Adv. Lane Detection | 8/10 | – Canny edge detection – Hough transform | Objective: Harder version of previous project. The video contains road with shadows, light reflections, big curves, obstructions etc. – At some point starts getting boring and tedious all tuning but still feels like learning interesting things and there is a sense of accomplishment as tasks are more difficult than previous project and results look better too. – (*not a course drawback but Computer Vision) Overall one thing that feels futile is how fixed all the settings are accounting for specific conditions of the videos (and the thought that with new video it would break). |

| 3 | Traffic Sign Detection | 7/10 | – Machine Learning – Deep Learning | Objective: Typical image classification task. Given road sign photos, detect which sign is it. This skips the object detection part (ie. bounding boxes), images of signs are already extracted from the photo. Goal is to achieve certain accuracy. -Decent amount of own coding and tuning. Quite fun project. I would probably rate higher, if i wasn’t biased with doing ML research as my day job. |

| 4 | Behavioural Cloning | 6.5/10 | – Machine Learning – Deep Learning | Objective: Given road curvature angle decide what should be the steering angle of the car. This is done with deep learning, goal is to drive car around a track without going out. -A lot of copying, little tuning for the basic track. Similar as previous project, I’m probably a bit more biased here in comparison to regular Udacity student. |

| 5 | Sensor Fusion | 3/10 | – Numerical Methods – Signal Processing – Kalaman Filter | Objective: Estimate position of moving objects around the car based on measurements from radar and lidar. -Copy-paste all the way, the lessons before were too much math and not very well explained. The project has a lot of already completed code, the simulator and server clunky to run/test/debug. |

| 6 | Kidnapped Vehicle | 6/10 | – Numerical Methods – Signal Processing – Particle Filter | Objective: Locate the position of moving car, given distances and positions of objects from car surroundings. – Project is fun to implement but the explanations of how to implement Particle Filter are poor, I think could be done a lot better. The code template and variable naming inconsistent and makes coding harder rather than easier. The project code seems to have been updated over the years and is inconsistent with the course instructions. – (*not quite course issue) Similar as Kalaman Filter, the Particile Fitler is a numerical method, therefore bug tracking can be a nightmare, small issues cause the entire solution to go way off. – Finally the simulator is a resource hog, in previous projects it didn’t matter much but in this project, there is a time requirement. Therefore, running the simulator and algorithm on the same machine causes a lot of bias. |

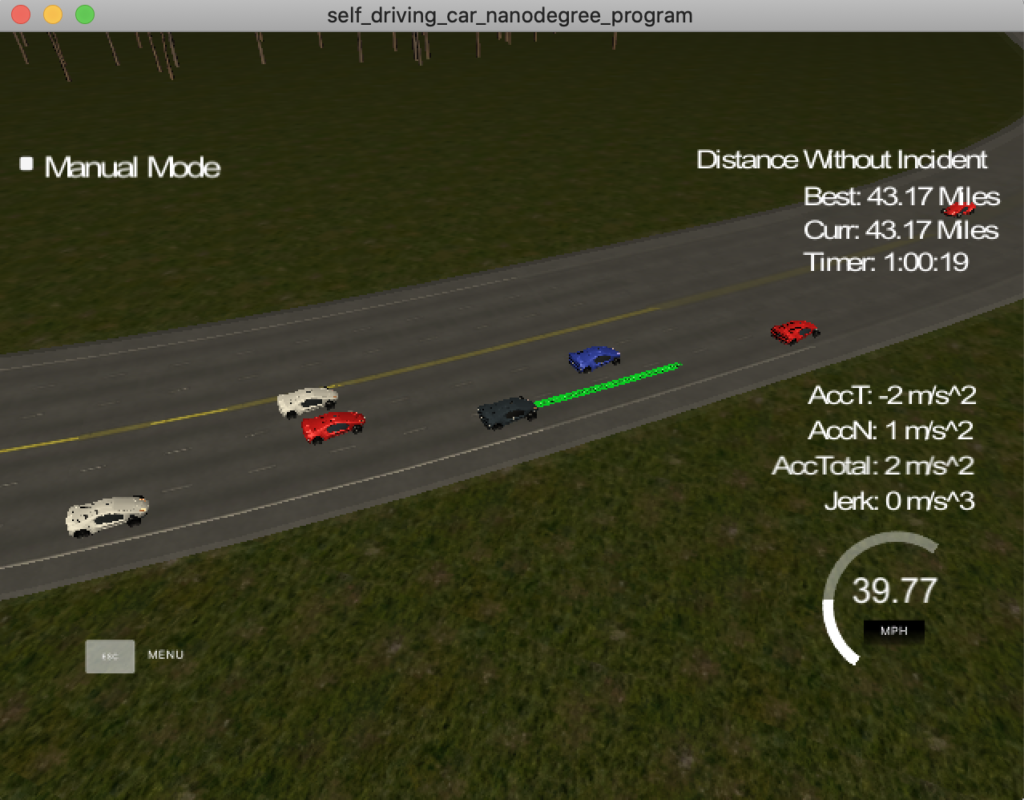

| 7 | Highway driving | 7/10 | – Path planning – A star – Dynamic Programming | Objective: Calculate car speed and trajectory during highway driving scenario, including lane changing. – Lessons leading up to this project seem very inconsistent (e.g “Lesson7: Search” – assignments and solutions are out of sync) – Aside of the drawbacks I found this project fun, it the simulator worked better I think that project would have a lot more potential. |

| 8 | PID controller | 5/10 | – PID controller – Twiddle algorithm | Objective: Implement car steering (ie actuation), this is same task as project 4 but non-ML approach. – The start of the project was interesting, especially to see how very very few lines of code manage to drive the car in track! – Afterwards I spent quite some time on improving the initalizaation and implementing Twiddle but that did not give too much effect, which was quite demotivating – Overall, this project feels a bit like the Lane Detection: so much depends on some “magic” parameter settings that it feels a bit impractical. |

| 9 | System Integration | 2/10 | – Implement ROS packages | Objective: Drive car on a test track. Implement some small bits of various car subsystems. – In nutshell I find this project is less on algorithms more on learning how to code using ROS framework. There are some more technical bits too that add to difficulty, especially if one didn’t follow Udacity walkthrough and wanted to do some optional material like traffic light classifier. – My overall impression is this was sadly the worst project assignment of them all. Mainly due to big hardware requirements and therefore big problems running ROS and simulator side-by-side. In previous projects I already had problems with simulator now my hardware being additionally hammered by the ROS – everything is extremely frustrating. – The only fun/interesting part was learning a bit about this ROS framework in a hands-on tutorial fashion which went beyond the regular ROS Gazebo simulator. |
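The Sensor Fusion row mentions the Kalman Filter and math-heavy lessons. The core predict/update cycle is fairly compact, so here is a hedged sketch: a one-axis constant-velocity Kalman filter in plain Python. It is a simplification under stated assumptions: both sensors are treated as giving a direct, linear position reading, just with different noise variances (the lidar-like and radar-like `r` values are hypothetical). Real radar measures range, bearing and range rate, which typically calls for a nonlinear variant such as the Extended Kalman Filter. The class name and the process-noise value `q` are illustrative choices, not the course's code.

```python
from dataclasses import dataclass, field


@dataclass
class KalmanCV1D:
    """Constant-velocity Kalman filter for one axis; state x = [position, velocity]."""

    q: float = 0.01  # process-noise intensity (hypothetical tuning value)
    x: list = field(default_factory=lambda: [0.0, 0.0])
    # Large initial covariance = "we know almost nothing yet".
    P: list = field(default_factory=lambda: [[1000.0, 0.0], [0.0, 1000.0]])

    def predict(self, dt: float) -> None:
        """Propagate the state and covariance forward by dt seconds."""
        p, v = self.x
        self.x = [p + v * dt, v]
        (a, b), (c, d) = self.P
        q = self.q
        # P = F P F^T + Q, with F = [[1, dt], [0, 1]] and a white-noise-acceleration Q.
        self.P = [
            [a + dt * (b + c) + dt * dt * d + q * dt**4 / 4, b + dt * d + q * dt**3 / 2],
            [c + dt * d + q * dt**3 / 2, d + q * dt**2],
        ]

    def update(self, z: float, r: float) -> None:
        """Fuse a position measurement z with noise variance r (H = [1, 0])."""
        (a, b), (c, d) = self.P
        s = a + r                # innovation covariance
        k0, k1 = a / s, c / s    # Kalman gain for position and velocity
        y = z - self.x[0]        # innovation: measurement minus prediction
        self.x = [self.x[0] + k0 * y, self.x[1] + k1 * y]
        # P = (I - K H) P
        self.P = [[a - k0 * a, b - k0 * b], [c - k1 * a, d - k1 * b]]
```

Each `update` call is where the "fusion" happens: a smaller `r` pulls the estimate harder toward that sensor's reading, so alternating `update(z, 0.1)` and `update(z, 1.0)` calls weights the two (hypothetical) sensors differently.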
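The Kidnapped Vehicle row criticizes how the Particle Filter is explained. The core loop is small: move the particles, weight them by how well they explain the observations, then resample. Below is a hedged 1-D sketch in plain Python. The landmark map, noise levels and function names are hypothetical, and it assumes each observation is already associated with its landmark; a full 2-D localizer also has to handle that data-association step and coordinate transforms.

```python
import math
import random

LANDMARKS = [5.0, 20.0, 35.0]  # hypothetical 1-D map of landmark positions


def observe(x):
    """Signed distances from position x to every landmark (noise-free here)."""
    return [lm - x for lm in LANDMARKS]


def weight(particle, observations, sigma):
    """Gaussian likelihood of the observations if the car were at `particle`."""
    err2 = sum((obs - (lm - particle)) ** 2 for obs, lm in zip(observations, LANDMARKS))
    return math.exp(-0.5 * err2 / sigma**2)


def particle_filter_step(particles, control, observations, rng,
                         motion_sigma=0.2, obs_sigma=1.0):
    """One predict / weight / resample cycle; returns (new particles, position estimate)."""
    # 1) Predict: move every particle by the control input plus motion noise.
    moved = [p + control + rng.gauss(0.0, motion_sigma) for p in particles]
    # 2) Weight: score each particle by how well it explains the observations.
    weights = [weight(p, observations, obs_sigma) for p in moved]
    total = sum(weights)
    if total == 0.0:  # every particle implausible: fall back to uniform weights
        weights = [1.0] * len(moved)
        total = float(len(moved))
    estimate = sum(w * p for w, p in zip(weights, moved)) / total
    # 3) Resample: draw a new particle set in proportion to the weights.
    resampled = rng.choices(moved, weights=weights, k=len(moved))
    return resampled, estimate
```

Starting from particles spread uniformly over the map (for example `[rng.uniform(0.0, 40.0) for _ in range(500)]`) mimics the "kidnapped" case where the initial position is unknown. Passing an explicit, seeded `random.Random` instance keeps runs reproducible, which helps with exactly the bug-hunting problem the row describes.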
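The Highway driving row lists A* among its technologies, and its search lessons are mentioned as being out of sync. As a hedged refresher on the search algorithm itself (not on how the highway project uses it), here is textbook A* on a 4-connected occupancy grid with unit step costs and a Manhattan-distance heuristic. The grid encoding (0 = free, 1 = blocked) and the function name are assumptions for illustration.

```python
import heapq


def a_star(grid, start, goal):
    """Shortest 4-connected path on a 0/1 grid as a list of (row, col), or None."""
    rows, cols = len(grid), len(grid[0])

    def h(cell):
        # Manhattan distance: admissible and consistent for unit-cost 4-connected moves.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(h(start), 0, start)]  # entries are (f = g + h, g, cell)
    came_from = {start: None}
    g_cost = {start: 0}
    while open_heap:
        _, g, cell = heapq.heappop(open_heap)
        if cell == goal:
            path = []
            while cell is not None:  # walk parent links back to the start
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        if g > g_cost[cell]:
            continue  # stale heap entry: a cheaper route to this cell was found later
        r, c = cell
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nxt = (r + dr, c + dc)
            if 0 <= nxt[0] < rows and 0 <= nxt[1] < cols and grid[nxt[0]][nxt[1]] == 0:
                new_g = g + 1
                if new_g < g_cost.get(nxt, float("inf")):
                    g_cost[nxt] = new_g
                    came_from[nxt] = cell
                    heapq.heappush(open_heap, (new_g + h(nxt), new_g, nxt))
    return None  # goal unreachable
```

The heuristic is what distinguishes A* from plain uniform-cost search: it steers expansion toward the goal while still guaranteeing a shortest path, as long as it never overestimates the remaining cost.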
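The PID controller row remarks how very few lines of code drive the car, and how Twiddle tuning did not help much. A hedged sketch of both pieces: a textbook PID controller plus the Twiddle loop (coordinate descent on the gains), run against a toy kinematic car. Assumptions, all hypothetical: the controller input is the cross-track error (CTE), i.e. the lateral offset from the lane center; the toy model, its constants (`dt`, `speed`, `drift`) and the function names are made up for illustration and are not the Udacity simulator's interface.

```python
class PID:
    """Textbook PID controller acting on the cross-track error (CTE)."""

    def __init__(self, kp: float, ki: float, kd: float) -> None:
        self.kp, self.ki, self.kd = kp, ki, kd
        self.integral = 0.0
        self.prev_error = None

    def update(self, error: float, dt: float = 1.0) -> float:
        self.integral += error * dt  # I term: corrects a steady bias such as drift
        derivative = 0.0 if self.prev_error is None else (error - self.prev_error) / dt
        self.prev_error = error
        # Negative sign: steer against the error to pull the car back to the center.
        return -(self.kp * error + self.ki * self.integral + self.kd * derivative)


def run(params, steps=200, dt=0.1, speed=1.0, drift=0.05):
    """Mean squared CTE of a toy car model (hypothetical, not the Udacity simulator)."""
    pid = PID(*params)
    y, heading = 1.0, 0.0  # start 1 m off the lane center, pointing straight ahead
    total = 0.0
    for _ in range(steps):
        steer = max(-1.0, min(1.0, pid.update(y, dt)))  # clamp the steering command
        heading += (steer + drift) * dt                 # drift models a steering bias
        y += speed * heading * dt
        total += y * y
    return total / steps


def twiddle(cost, params, deltas, tol=1e-3, max_iter=50):
    """Coordinate descent: nudge each gain up/down and keep changes that lower the cost."""
    p, dp = list(params), list(deltas)  # copies, so the caller's lists stay untouched
    best = cost(p)
    for _ in range(max_iter):
        if sum(dp) < tol:
            break
        for i in range(len(p)):
            p[i] += dp[i]
            err = cost(p)
            if err < best:
                best = err
                dp[i] *= 1.1  # success: try a bigger step next time
                continue
            p[i] -= 2 * dp[i]  # try the opposite direction
            err = cost(p)
            if err < best:
                best = err
                dp[i] *= 1.1
            else:
                p[i] += dp[i]  # neither direction helped: restore and shrink the step
                dp[i] *= 0.9
    return p, best
```

A call such as `twiddle(run, [0.5, 0.0, 0.5], [0.1, 0.01, 0.1])` returns tuned gains and their cost. Because Twiddle only keeps changes that lower the cost on this one scenario, the tuned gains are fitted to that scenario, which is the same "magic parameter" issue the row describes.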

Overall, I think the course quality is best in the initial modules. I had the most fun with both Lane Finding projects (though all the parameter-setting frustration tells me old-school CV might not be the thing for me). In line with my professional interests, both machine learning projects were quite cool too, although they felt like "not enough". Finally, from the second part, I liked the Highway Driving project a lot: sometimes frustrating, but a lot of fun. After completing it, I felt like I could dig a lot deeper into it.

If I had to point out the single biggest gripe across all projects, it would be the simulator. It's quite bad. I'm guessing the intention was to make the projects more visually appealing, but due to performance issues it ends up being very, very frustrating. I ran the simulator on either a MacBook Pro or a Surface Book 2 with a GPU, and it was super annoying on both (and it's not like the simulator has "next-gen" graphics).

Wrap up

I suppose the best recommendation I can give this course is that, compared to the regular academic courses I had during my university years, it is just about on par in terms of quality. The lecturers are competent and the learning material is quite decent, albeit its quality goes up and down from lesson to lesson, especially in the project assignments. I think this is because some modules have been refreshed over the years while others are old ones.

The marketing spin about the amazing skillset the course gives you is blown quite out of proportion. I doubt anybody believes Udacity's advertising about landing a great job after this course, but it's a bit annoying to keep listening to it throughout the course. Likewise, the lecturers give some odd, over-the-top praise about what a big accomplishment it is to finish implementing this or that algorithm. It's obvious why Udacity does it, but I find it unnecessary and irritating.

One rather demotivating thing I started to notice around the middle of the course: the learning material (videos, text, coding exercises) ends up not contributing to the project that follows. Sometimes there is quite an in-depth study of some algorithm, with lots of maths etc., but the project that comes after doesn't use it at all.

Another issue: finishing the course within the recommended half-year requires a lot of effort. I think it's close to impossible if you do the course after work, for maybe ~2-3 hours a day. Quite a few times, I decided to skip optional assignments because I knew I wouldn't be able to finish the entire course on time otherwise. Likewise, I really wanted to go deeper on some subjects that interested me but had no time. You could say I could just take it easy and go past the 6-month mark, but the catch is that access to the course is time-based: after the initial 6 months, you need to pay again to keep full access 🙂